What is Alert Fatigue in DevOps and How to Combat It With the Help of ilert

You may have a team chat where automatic alerts fall in great numbers daily. Although these alerts are meant to notify you of issues, they often go unnoticed as you scroll through dozens of them. When we talk about IT alerts, things are getting even more complicated because they include many technical details you must decipher. This is one of many simple examples of alert fatigue. The story of a boy who cried wolf had a bad ending, and not to repeat that tragic legend, let's dive deeper into alert fatigue and how DevOps people can avoid it.

What does alert fatigue mean?

Alert fatigue, also known as alarm or warning fatigue, is a phenomenon that occurs when individuals are exposed to a large number of frequent alerts or notifications, leading them to become desensitized to these warnings. This desensitization often reduces response to alerts, including slower reaction times, missed alerts, or completely ignoring essential alarms. Alert fatigue is a significant concern in various fields, such as healthcare, aviation, and IT, including DevOps and SRE (Site Reliability Engineering) teams.

What are the consequences of alert fatigue?

Warning fatigue was cited as a contributing factor to the 49 deaths caused by Hurricane Ida. Several notifications were sent to phones warning people about imminent dangerous weather conditions a few days before Ida hit the American Northeast. These critical alerts were lost amidst a clutter of other phone notifications and consequently went unnoticed. That is an extreme outcome example, but it's not unique and not the only time alert fatigue led to catastrophe. A false sense of security is one of the alert overload fallouts.

In IT, high volumes of alerts are also becoming more and more common as more IT systems are introduced in companies' tech stacks. A 2021 study revealed that companies failed to investigate or outright ignored approximately 30% of cybersecurity alerts. System downtime, security breaches, reputational damage, increased operational costs — a massive list of potential risks arise when systems produce unmanageable alerts.

There are also severe outcomes on team and individual levels. The psychological and emotional toll of dealing with a relentless flood of alerts can lead to burnout among IT staff. This can result in decreased job satisfaction, reduced performance, and higher turnover rates as employees seek less stressful work environments. Additionally, constant interruptions from non-critical alerts can disrupt workflow and focus, lowering overall productivity. IT professionals may spend excessive time managing alerts rather than working on proactive measures or strategic projects.

What are the reasons for a redundant amount of alerts?

Now that we understand the negative impact of alert or warning fatigue, where does it originate from? In the context of DevOps and IT, alert fatigue typically arises when systems and tools generate an excessive number of alerts, many of which may be false alarms or not urgent.

Here are ten reasons why IT teams end up in alert fatigue

- Changes in the environment. Frequent changes in the IT environment, such as updates, new deployments, or configuration changes, can inadvertently increase the number of alerts.

- Overly sensitive thresholds. Monitoring tools configured with thresholds that are too sensitive can trigger alerts for minor issues or normal fluctuations, leading to an excessive number of notifications.

- Lack of alert prioritization. Without proper prioritization, all alerts are treated as equally important, resulting in a large volume of alerts, including many that are not urgent or critical.

- Redundant monitoring tools. Using multiple tools that monitor the same systems or metrics can lead to duplicate alerts for the same issue.

- Poorly defined alert criteria. Alerts set up without specific or clear criteria can trigger notifications for events that are not actually indicative of problems.

- Inefficient alert filtering and aggregation. A lack of effective filtering and aggregation mechanisms results in numerous alerts being generated for events that could be consolidated into a single notification.

- Lack of regular review and optimization. Failing to review and optimize alert configurations regularly can result in outdated or irrelevant alerts being triggered.

- Inadequate incident management processes. Without effective incident management, alerts may continue to be generated for known issues that are already being addressed.

- Lack of contextual awareness. Alerts generated without considering the context, such as time of day or related events, can contribute to the volume of unnecessary alerts.

- Ineffective alert escalation rules. Poorly designed escalation rules can lead to alerts being sent to too many people or repeatedly sent without resolution.

While frequent deployment is a positive sign of a fast-paced and evolving system, all other points represent areas for improvement.

How to combat alert fatigue with ilert?

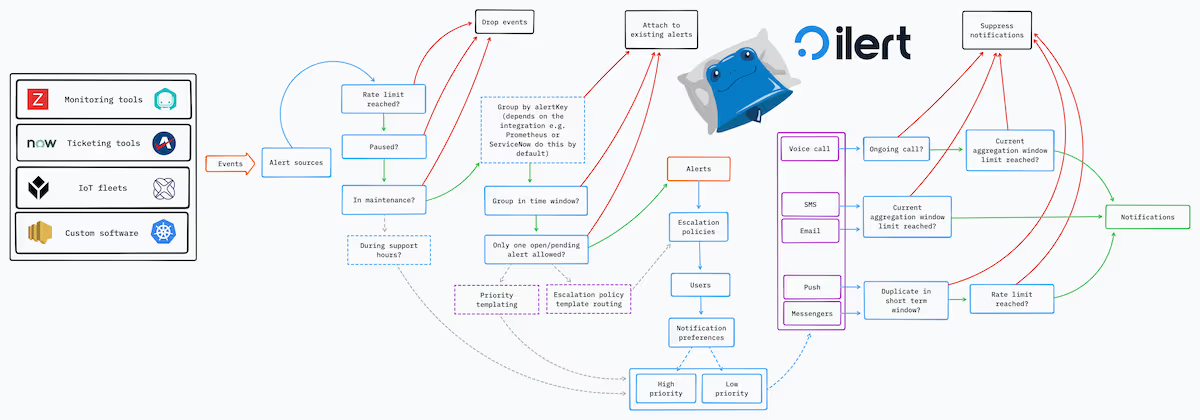

Your incident management platform is a key location where many issues can be addressed. Before delving into specific recommendations, it's important to note that ilert plays a crucial role in preventing an overwhelming cascade of alerts. The illustration below maps the core ilert flow between receiving events and sending notifications and shows where rate limits, aggregations, and suppressions may apply. ilert never simply "drops" a notification due to rate limiting or suppression, and you will always see an entry for the notification (no matter its state) in the related alert's timeline.

Here are a few recommendations on how to keep your team productive and alerted only when it's needed.

Use various escalation policies. Escalation policies define whom to assign an alert to when it is triggered by a monitoring tool. Every policy is a set of rules that specifies a notification target — a user or a schedule — and an escalation timeout. If you have many monitoring tools that oversee different aspects of your system, it's better not to use the same policy for all of them and specify the rules depending on team members' expertise, working hours, etc.

Prioritize alerts. It's crucial to identify high-priority alerts and distinguish them from less urgent ones. Use different notification priorities for different alert sources in ilert by choosing one of four options in Alert source settings.

Provide context for the alerts. The more information you receive from the very beginning, the easier it will be to identify the source of the problem. In ilert, you can customize alert summary and details depending on alert payload and provide links. You can learn more about alert templating and syntax in the documentation.

Use alert grouping. By ticking alert grouping option in alert source settings, you give permission to ilert to cluster related alerts within a defined time window. Or, as another option, to open only one alert per specified monitoring tool and aggregate subsequent ones until the alert is either accepted or resolved.

Establish support hours. The support hours feature defines notification priority based on personnel availability. "Quiet times," when teams or individual members can ignore non-essential alerts, are important for long-term productivity.

Avoid gaps in on-call schedules. If an alert occurs during a time with no coverage in your schedule, then the alert will be escalated immediately to the next escalation level, bypassing the usual waiting period for the escalation timeout. If no one is available on the entire escalation policy, no one will be notified, thus the alert will be overlooked.

Alert fatigue is easier to prevent than to diminish

Regularly reviewing your monitoring and alerting system cannot be overestimated, as it's much easier to prevent alert fatigue than to reduce it once it's established. A holistic understanding of the system's alerts could lead to better interactions, less frustration, and improved response times.

Read more on ilert blog:

Mastering IT Alerting: A Short Guide for DevOps Engineers