EU AI Act: what changes in August 2025 and how to prepare


On August 2, 2025, a key part of the EU AI Act starts to apply. It has serious implications for how you manage incidents related to artificial intelligence.

While most of the regulation will not apply until August 2026, new obligations for providers of general-purpose AI (GPAI) models begin this summer. If you are building or deploying AI-powered services in Europe, the clock is ticking.

The good news is that if you already have a structured incident response process, you are more prepared than you think. But staying compliant and avoiding penalties will require some important updates to how incidents are detected, documented, and communicated across your organisation.

In this blog, we’ll break down:

- What exactly is changing in August 2025.

- How organised incident response fits into the EU AI Act timeline.

- What high-risk and general-purpose AI obligations actually mean.

- And how ilert helps teams stay compliant.

What is the EU AI Act, and who does it apply to?

The EU AI Act is the world’s first comprehensive regulatory framework for artificial intelligence. Its main objective is to ensure that AI systems used in the European Union are safe, transparent, and respect fundamental rights.

Adopted in 2024, the Act uses a risk-based approach to classify AI systems into four levels: unacceptable, high, limited, and minimal risk, with specific requirements for each.

The Act applies to a broad range of actors in the AI value chain, including:

- AI system providers (developers or vendors).

- Deployers (organisations using AI systems).

- Importers and distributors of AI technologies.

- Providers and deployers based outside the EU, where the output of their AI system is used in the EU.

In other words, if your company offers or operates AI-powered services in the EU, especially in areas defined as "high-risk" (like recruitment, healthcare, or finance), you are likely subject to compliance obligations.

To determine this, assess whether your AI system affects safety, rights, or critical services under the AI Act’s risk categories.

The AI Act also affects developers of general-purpose AI models (GPAI) such as LLMs. From August 2025, these providers will need to meet new transparency, documentation, and risk mitigation requirements.

What changes in August 2025

Starting August 2, 2025, new rules under the AI Act take effect for providers of general-purpose AI (GPAI) models, including large language models (LLMs).

According to the European Commission, GPAI providers must comply with the following requirements:

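- Transparency: Keeping technical documentation of the model up to date and giving downstream providers the information they need to integrate it responsibly.

- Data disclosures: Publishing a summary of the content used to train the model, and putting in place a policy to comply with EU copyright law.

- Risk mitigation: For the most capable models, those classified as posing systemic risk, assessing and reducing those risks and reporting serious incidents.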

What obligations are already in effect?

As of February 2, 2025, the EU AI Act has already brought two key sets of obligations into effect:

1. Banned AI practices (unacceptable risk)

The AI Act prohibits a list of AI systems considered to pose an unacceptable risk to safety, human rights, or democratic values. These include:

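- Social scoring, whether by public authorities or private actors.

- Real-time remote biometric identification in publicly accessible spaces for law enforcement (e.g., live facial recognition), subject to narrow exceptions.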

- AI systems that manipulate behaviour or exploit vulnerabilities.

- Emotion recognition in workplaces or educational settings.

- Predictive policing based solely on profiling or personality traits.

- Untargeted scraping of facial images from the internet or CCTV footage to build facial recognition databases.

2. AI literacy obligations

The Act also introduces AI literacy requirements for providers and deployers. This includes:

- Ensuring those who use or oversee AI systems are trained to understand how the system works.

- Recognising biases, risks, and limitations.

- Knowing how to monitor and intervene when needed.

These rules are designed to increase awareness and safe use of AI across industries, even before high-risk systems face stricter rules in 2026.

How does incident management play a central role in EU AI Act compliance?

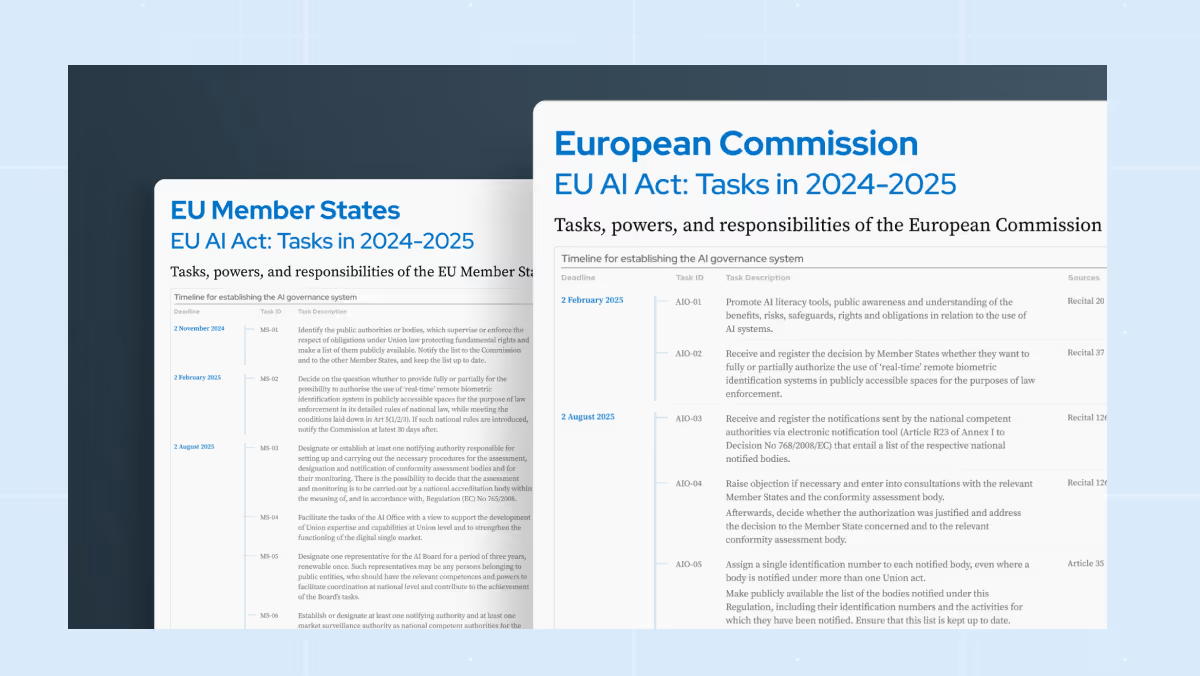

For incident-response teams, EU AI Act compliance centres on five operational duties. We have mapped each duty to its article in the regulation and paired it with a clear next step.

Automatic event logs (Article 12)

Providers of high-risk AI must keep tamper-proof logs so authorities can reconstruct system behaviour.

Next step: enable machine-generated timelines that capture every alert, escalation, rollback and mitigation action, then export those logs in a regulator-ready format.
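One common way to make such a timeline tamper-evident is to chain entries by hash, so that editing any earlier entry invalidates everything after it. The sketch below illustrates that idea only; the class name `IncidentTimeline`, its fields, and the JSON export format are hypothetical, and it does not describe how ilert or any regulator-mandated format works.

```python
import hashlib
import json
from datetime import datetime, timezone


class IncidentTimeline:
    """Append-only event log in which each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry):
        # Hash a canonical JSON form of every field except the hash itself.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def append(self, kind, detail, at=None):
        at = at or datetime.now(timezone.utc)
        entry = {
            "seq": len(self.entries),
            "at": at.isoformat(),
            "kind": kind,  # e.g. "alert", "escalation", "rollback", "mitigation"
            "detail": detail,
            "prev": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True only if no entry was altered, removed, or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True

    def export(self):
        """Serialise the full timeline as JSON (an assumed export format)."""
        return json.dumps(self.entries, indent=2)
```

A hash chain detects tampering but does not prevent it, so in practice the latest hash would also need to be stored somewhere the log's operators cannot rewrite.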

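Serious-incident notification (Article 73)

Serious incidents must be reported to the market surveillance authority without delay once a link to the AI system is established, and no later than 15 days after the provider becomes aware of them. Shorter limits apply to the gravest cases: 10 days where a person has died, and 2 days for a widespread infringement or a serious disruption of critical infrastructure.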

Next step: use playbooks that notify engineering, legal and communications at the same time so reporting can start while the incident is still unfolding.
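A playbook step like this has two parts: fan out the first notification to every team at once, and start a reporting clock from the moment of awareness. The sketch below shows both under stated assumptions. The function names, the `send` callback, and the category keys are hypothetical, and the windows in `REPORTING_WINDOWS` are configuration based on one reading of Article 73 that should be confirmed against the regulation text and with legal counsel.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta, timezone

# Assumed outer reporting windows, keyed by incident category.
# These are illustrative configuration values, not legal advice.
REPORTING_WINDOWS = {
    "default": timedelta(days=15),
    "death": timedelta(days=10),
    "widespread_or_critical_infrastructure": timedelta(days=2),
}


def reporting_deadline(aware_at, category="default"):
    """Latest time a report may be filed, counted from the moment of awareness."""
    return aware_at + REPORTING_WINDOWS[category]


def notify_all(incident_id, teams, send):
    """Call send(team, message) for every team concurrently.

    `send` is a caller-supplied function (e-mail, chat, pager, ...).
    Returns a dict mapping each team to whatever `send` returned.
    """
    message = f"Incident {incident_id}: start regulatory assessment"
    with ThreadPoolExecutor(max_workers=max(1, len(teams))) as pool:
        futures = {team: pool.submit(send, team, message) for team in teams}
        return {team: future.result() for team, future in futures.items()}


if __name__ == "__main__":
    aware_at = datetime.now(timezone.utc)
    notify_all("INC-1", ["engineering", "legal", "communications"],
               lambda team, msg: print(f"-> {team}: {msg}"))
    print("Report due by:", reporting_deadline(aware_at).isoformat())
```

Keeping the windows in a single table means a legal review can change a deadline without touching the notification logic.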

Live cross-functional visibility (Article 73 §4)

Regulators expect clear roles and responsibilities during an incident.

Next step: give legal, security and leadership real-time access to the incident timeline and provide controlled status page updates so external stakeholders receive verified information without extra meetings.

Automated post-incident evidence (Article 73 §4)

Records must be stored for inspection and include description, impact, corrective measures and affected parties.

Next step: generate a post-incident report automatically from the live timeline, then add impact analysis and follow-up actions so every report contains the same compliance fields.
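To make "the same compliance fields in every report" concrete, a report can be drafted from timeline events and then checked for gaps before it is filed. The sketch below assumes timeline events are plain dictionaries with `kind` and `detail` keys; `PostIncidentReport`, `draft_report`, and the field names are hypothetical, chosen to mirror the four fields listed above.

```python
from dataclasses import dataclass, field

# The four fields every report must contain, per the list above.
REQUIRED_FIELDS = ("description", "impact", "corrective_measures", "affected_parties")


@dataclass
class PostIncidentReport:
    incident_id: str
    description: str = ""
    impact: str = ""
    corrective_measures: list = field(default_factory=list)
    affected_parties: list = field(default_factory=list)
    timeline: list = field(default_factory=list)

    def missing_fields(self):
        """Names of required fields that are still empty."""
        return [name for name in REQUIRED_FIELDS if not getattr(self, name)]


def draft_report(incident_id, events):
    """Pre-fill a report from timeline events; humans add impact and parties."""
    alerts = [e["detail"] for e in events if e["kind"] == "alert"]
    fixes = [e["detail"] for e in events if e["kind"] in ("rollback", "mitigation")]
    return PostIncidentReport(
        incident_id=incident_id,
        description="; ".join(alerts),
        corrective_measures=fixes,
        timeline=list(events),
    )


if __name__ == "__main__":
    events = [
        {"kind": "alert", "detail": "inference error rate above threshold"},
        {"kind": "rollback", "detail": "reverted to previous model version"},
    ]
    report = draft_report("INC-1", events)
    print("Still to complete:", report.missing_fields())
```

The draft deliberately leaves impact and affected parties empty, since those usually need human analysis rather than timeline data.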

Continuous risk mitigation for GPAI (Article 55)

Providers of general-purpose AI models with systemic risk must assess and mitigate those risks on an ongoing basis.

Next step: integrate monitoring signals such as model-output drift or inference-error spikes so threshold breaches automatically open an incident and trigger the steps above.
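As a rough illustration of a threshold breach opening an incident, the sketch below tracks the inference error rate over a sliding window and fires a callback once per breach. The class name `ErrorRateMonitor`, its default window and threshold, and the `open_incident` callback are all assumptions; a real setup would usually rely on an existing monitoring stack rather than hand-rolled code.

```python
from collections import deque


class ErrorRateMonitor:
    """Opens one incident each time the windowed error rate crosses a threshold."""

    def __init__(self, window=100, threshold=0.05, open_incident=print):
        self.results = deque(maxlen=window)  # True = failed inference
        self.threshold = threshold
        self.open_incident = open_incident   # hypothetical hook into incident tooling
        self.breached = False

    def record(self, is_error):
        self.results.append(bool(is_error))
        if len(self.results) < self.results.maxlen:
            return  # not enough data yet for a full window
        rate = sum(self.results) / len(self.results)
        if rate > self.threshold:
            if not self.breached:
                # Fire once per breach, not on every bad request.
                self.breached = True
                self.open_incident(
                    f"inference error rate {rate:.1%} exceeds {self.threshold:.1%}"
                )
        else:
            self.breached = False  # recovered; the next breach opens a new incident


if __name__ == "__main__":
    monitor = ErrorRateMonitor(window=10, threshold=0.2)
    for outcome in [False] * 10 + [True] * 4:
        monitor.record(outcome)
```

The `breached` flag keeps a sustained outage from opening a new incident on every request, which would otherwise flood the on-call team.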

The AI Act shares core principles with GDPR, NIS2 and DORA: timely notifications, transparent documentation and clear accountability. Capturing all incident data in one workflow therefore lets you satisfy multiple regulations with the same evidence set.

How ilert meets these requirements

Automatic logs – every alert, escalation and response action is stored in a tamper-proof timeline you can export for regulators.

Fast notification – multi-channel alerting and playbooks notify engineering, legal and comms at once, supporting the Article 73 reporting deadlines.

Cross-team visibility – role-based views and status pages keep security and leadership informed without extra meetings.

Post-incident evidence – one click turns the live timeline into an audit-ready post-mortem with impact, measures and follow-ups.


Closing thoughts

The EU AI Act isn’t just another compliance checkbox. It’s a signal that organisations need to rethink how they manage risk in an AI-powered world. For companies deploying or building high-risk AI systems, strong incident response practices are no longer optional. They’re essential.

Whether you’re preparing for the August 2025 requirements or the broader rollout in 2026, the key is to embed compliance into your operational workflows, not bolt it on later. With tools like ilert, much of this is already within reach: fully automated alerting and escalation, cross-team coordination, real-time documentation, and audit-ready postmortems.

The best part? When incident response is done right, compliance becomes a natural by-product, not a burden.

Quick summary

The EU AI Act introduces strict incident reporting obligations, and its first rules, on banned practices and AI literacy, have applied since February 2025. From August 2, 2025, providers of general-purpose AI models must meet new requirements around transparency, safety, and copyright.

With Article 73 setting short reporting deadlines for serious incidents involving high-risk AI systems, having a structured, automated incident response process in place is the most efficient way to stay compliant. ilert makes this achievable by helping teams document incidents in real time, streamline cross-functional collaboration, and reduce the overhead of regulatory reporting.